Image sensors measure light intensity, but angle, spectrum, and other aspects of light must be extracted to significantly advance machine vision.

In Applied Physics Letters, researchers at the University of Wisconsin-Madison, Washington University in St. Louis, and OmniVision Technologies highlight the nanostructured components, integrated on image sensor chips, that are most likely to have an impact on multimodal imaging.

The developments could enable biomedical imaging to detect abnormalities at different tissue depths, autonomous vehicles to see around corners, and telescopes to see through interstellar dust.

“Image sensors will gradually undergo a transition to become the ideal artificial eyes of machines,” co-author Yurui Qu, from the University of Wisconsin-Madison, writes. “An evolution leveraging the remarkable achievement of existing imaging sensors is likely to generate more immediate impacts.”

Image sensors, which convert light into electrical signals, are composed of millions of pixels on a single chip. The challenge is to miniaturize multifunctional components and integrate them into the sensor itself.

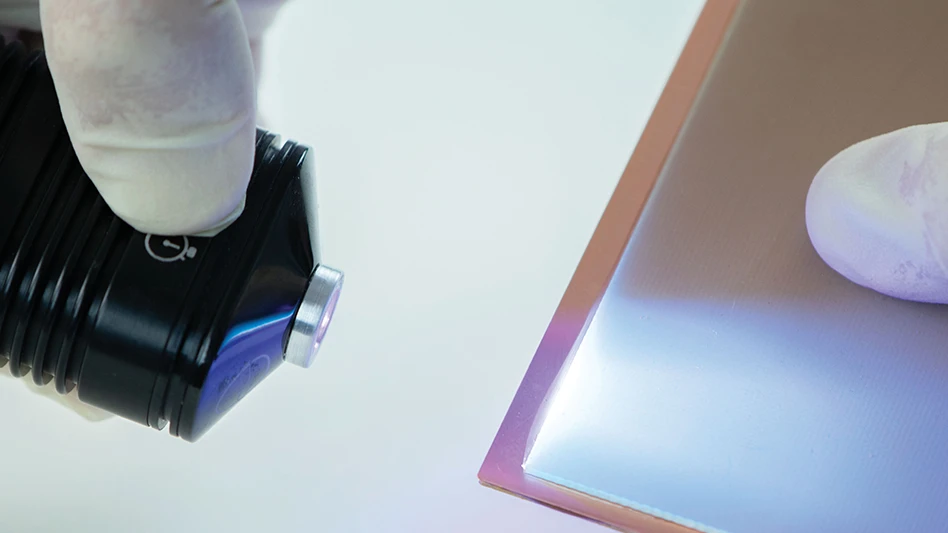

The researchers detail a promising approach to detecting multiple spectral bands: fabricating an on-chip spectrometer. They deposit silicon photonic crystal filters on top of the pixels, creating complex interactions between the incident light and the sensor. The pixels beneath the films record the distribution of light energy, from which the spectrum of the light can be inferred. The device, less than a hundredth of a square inch in size, can be programmed to meet various spectral ranges and resolution levels, covering almost any spectral regime from visible to infrared.
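The article does not describe how the spectrum is computed from the pixel readings. A common way to model a filter-based computational spectrometer is as a linear system y = A s: each row of A is one filter's calibrated transmission across a set of spectral bands, s is the unknown spectrum, and y holds the pixel readings. The minimal pure-Python sketch below assumes that model, a hypothetical random transmission matrix, and Tikhonov-regularized least squares as the reconstruction method. The helper names (`matvec`, `solve_linear`, `reconstruct_spectrum`) and the regularization weight `lam` are illustrative, not taken from the researchers' work.

```python
import random


def matvec(A, x):
    """Multiply matrix A (list of rows) by vector x."""
    return [sum(a * b for a, b in zip(row, x)) for row in A]


def solve_linear(M, b):
    """Solve M x = b by Gaussian elimination with partial pivoting."""
    n = len(M)
    # Augmented copy so the caller's lists are left untouched.
    aug = [list(M[i]) + [b[i]] for i in range(n)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    # Back-substitution on the upper-triangular system.
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = sum(aug[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (aug[i][n] - s) / aug[i][i]
    return x


def reconstruct_spectrum(A, y, lam=1e-6):
    """Tikhonov-regularized least squares (assumed method, not the paper's):
    minimize ||A s - y||^2 + lam * ||s||^2 via the normal equations
    (A^T A + lam I) s = A^T y."""
    m, n = len(A), len(A[0])
    AtA = [[sum(A[k][i] * A[k][j] for k in range(m)) + (lam if i == j else 0.0)
            for j in range(n)] for i in range(n)]
    Aty = [sum(A[k][i] * y[k] for k in range(m)) for i in range(n)]
    return solve_linear(AtA, Aty)


if __name__ == "__main__":
    rng = random.Random(0)
    n_bands, n_filters = 5, 8
    # Hypothetical transmission matrix: row k = how filter k passes each band.
    A = [[rng.uniform(0.1, 1.0) for _ in range(n_bands)] for _ in range(n_filters)]
    true_spectrum = [0.2, 0.9, 0.4, 0.0, 0.6]
    y = matvec(A, true_spectrum)  # simulated, noiseless pixel readings
    estimate = reconstruct_spectrum(A, y)
    print([round(v, 3) for v in estimate])
```

With more filters than bands and noiseless readings, a small `lam` recovers the spectrum almost exactly; with real, noisy data a larger `lam` (or a different prior, such as spectral smoothness) trades some bias for stability.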

The researchers built a component that detects angular information to measure depth and construct 3D shapes at subcellular scales. The work was inspired by the directional hearing of animals such as geckos, whose heads are too small to locate the source of a sound the way humans and larger animals do. Instead, these animals use coupled eardrums to determine the direction of sound with a structure orders of magnitude smaller than the corresponding acoustic wavelength.

Similarly, pairs of silicon nanowires were constructed as optical resonators. The optical energy stored in the two resonators is sensitive to the angle of incidence, so the wire closer to the light produces the stronger current. By comparing the stronger and weaker currents from the two wires, the angle of the incoming light can be determined. Millions of these nanowires can be placed on a 1 mm² chip.
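The article says only that the two currents are compared. One simple way to do that, assumed here rather than taken from the paper, is to compute the normalized difference (I_a - I_b)/(I_a + I_b), which cancels the overall brightness, and then invert a measured calibration curve. The sketch below uses a hypothetical sine-shaped calibration curve sampled every 10°; `contrast`, `angle_from_currents`, `CALIB_ANGLES`, and `CALIB_CONTRAST` are illustrative names.

```python
import math
from bisect import bisect_left


def contrast(i_a, i_b):
    """Normalized difference of the two wire currents.

    Dividing by the sum cancels the overall brightness, so the value
    ideally depends only on the angle of incidence."""
    total = i_a + i_b
    if total <= 0:
        raise ValueError("no photocurrent measured")
    return (i_a - i_b) / total


def angle_from_currents(i_a, i_b, calib_angles, calib_contrast):
    """Estimate the incidence angle by linearly interpolating a calibration
    curve. calib_contrast must increase monotonically with calib_angles."""
    c = contrast(i_a, i_b)
    if not calib_contrast[0] <= c <= calib_contrast[-1]:
        raise ValueError("contrast outside the calibrated range")
    k = bisect_left(calib_contrast, c)
    if k == 0:
        return calib_angles[0]
    c0, c1 = calib_contrast[k - 1], calib_contrast[k]
    a0, a1 = calib_angles[k - 1], calib_angles[k]
    return a0 + (a1 - a0) * (c - c0) / (c1 - c0)


# Hypothetical calibration (not measured data): contrast vs. angle in degrees.
CALIB_ANGLES = list(range(-40, 41, 10))
CALIB_CONTRAST = [0.8 * math.sin(math.radians(a)) for a in CALIB_ANGLES]

if __name__ == "__main__":
    true_angle = 15.0
    c_true = 0.8 * math.sin(math.radians(true_angle))
    brightness = 3.0  # arbitrary scale; cancels out in the contrast
    i_a, i_b = brightness * (1 + c_true), brightness * (1 - c_true)
    print(round(angle_from_currents(i_a, i_b, CALIB_ANGLES, CALIB_CONTRAST), 1))
```

Because the calibration points are 10° apart, linear interpolation leaves a small error between them; a finer calibration grid, or a fitted model of the device response, would reduce it.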

The research could support advances in lensless cameras, augmented reality (AR), and robotic vision.

University of Wisconsin-Madison

https://www.wisc.edu

Washington University (St Louis)

https://wustl.edu

OmniVision Technologies

https://www.ovt.com

From the September 2022 issue of Today's Medical Developments.